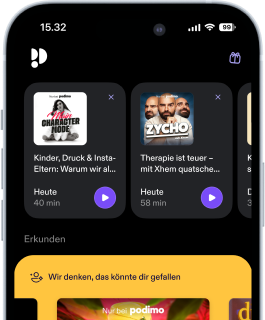

The TWIML AI Podcast (formerly This Week in Machine Learning & Artificial Intelligence)

Podcast by Sam Charrington

All episodes

762 episodes

In this episode, we're joined by Lin Qiao, CEO and co-founder of Fireworks AI. Drawing on key lessons from her time building PyTorch, Lin shares her perspective on the modern generative AI development lifecycle. She explains why aligning training and inference systems is essential for creating a seamless, fast-moving production pipeline and for preventing the friction that often stalls deployment. We explore the strategic shift from treating models as commodities to viewing them as core product assets. Lin details how post-training methods, like reinforcement fine-tuning (RFT), allow teams to leverage their own proprietary data to continuously improve these assets. Lin also breaks down the complex challenge of what she calls "3D optimization" (balancing cost, latency, and quality) and emphasizes the role of clear evaluation criteria in guiding this process, moving beyond unreliable methods like "vibe checking." Finally, we discuss the path toward the future of AI development: designing a closed-loop system for automated model improvement, a vision made more attainable by the exciting convergence of open and closed-source model capabilities. The complete show notes for this episode can be found at https://twimlai.com/go/742.

In this episode, Filip Kozera, founder and CEO of Wordware, explains his approach to building agentic workflows where natural language serves as the new programming interface. Filip breaks down the architecture of these "background agents," explaining how they use a reflection loop and tool-calling to execute complex tasks. He discusses the current limitations of agent protocols like MCP and how developers can extend them to handle the required context and authority. The conversation challenges the idea that more powerful models lead to more autonomous agents, arguing instead for "graceful recovery" systems that proactively bring humans into the loop when the agent "knows what it doesn't know." We also get into the "application layer" fight, exploring how SaaS platforms are creating data silos and what this means for the future of interoperable AI agents. Filip also shares his vision for the "word artisan": the non-technical user who can now build and manage a fleet of AI agents, fundamentally changing the nature of knowledge work. The complete show notes for this episode can be found at https://twimlai.com/go/741.

In this episode, Jared Quincy Davis, founder and CEO at Foundry, introduces the concept of "compound AI systems," which allow users to create powerful, efficient applications by composing multiple, often diverse, AI models and services. We discuss how these "networks of networks" can push the Pareto frontier, delivering results that are simultaneously faster, more accurate, and even cheaper than single-model approaches. Using examples like "laconic decoding," Jared explains the practical techniques for building these systems and the underlying principles of inference-time scaling. The conversation also delves into the critical role of co-design, where the evolution of AI algorithms and the underlying cloud infrastructure are deeply intertwined, shaping the future of agentic AI and the compute landscape. The complete show notes for this episode can be found at https://twimlai.com/go/740.

In this episode, Kwindla Kramer, co-founder and CEO of Daily and creator of the open source Pipecat framework, joins us to discuss the architecture and challenges of building real-time, production-ready conversational voice AI. Kwin breaks down the full stack for voice agents, from the models and APIs to the critical orchestration layer that manages the complexities of multi-turn conversations. We explore why many production systems favor a modular, multi-model approach over the end-to-end models demonstrated by large AI labs, and how this impacts everything from latency and cost to observability and evaluation. Kwin also digs into the core challenges of interruption handling, turn-taking, and creating truly natural conversational dynamics, and how to overcome them. We discuss use cases, thoughts on where the technology is headed, the move toward hybrid edge-cloud pipelines, the exciting future of real-time video avatars, and much more. The complete show notes for this episode can be found at https://twimlai.com/go/739.

Today, we're joined by Fatih Porikli, senior director of technology at Qualcomm AI Research, for an in-depth look at several of Qualcomm's accepted papers and demos featured at this year’s CVPR conference. We start with “DiMA: Distilling Multi-modal Large Language Models for Autonomous Driving,” an end-to-end autonomous driving system that distills large language models for structured scene understanding and safe motion planning in critical "long-tail" scenarios. We explore how DiMA utilizes LLMs' world knowledge and efficient transformer-based models to significantly reduce collision rates and trajectory errors. We then discuss “SharpDepth: Sharpening Metric Depth Predictions Using Diffusion Distillation,” a diffusion-distilled approach that combines generative models with metric depth estimation to produce sharp, accurate monocular depth maps. Fatih also shares a look at Qualcomm’s on-device demos, including text-to-3D mesh generation, real-time image-to-video and video-to-video generation, and a multi-modal visual question-answering assistant. The complete show notes for this episode can be found at https://twimlai.com/go/738.